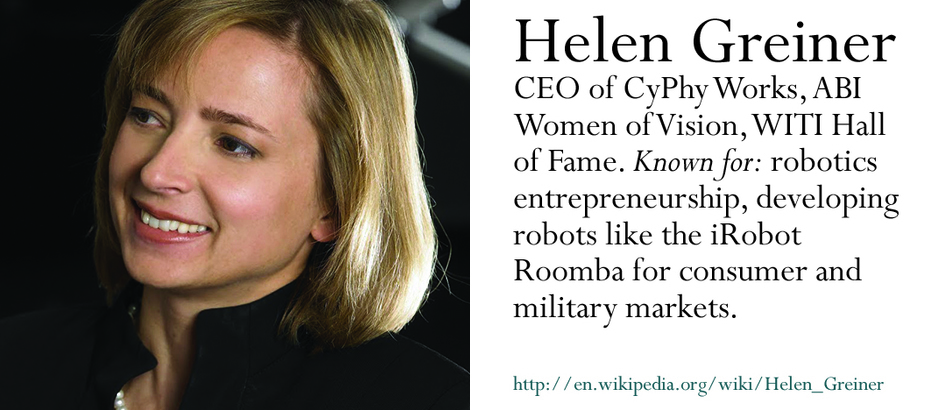

Technical Women

Today

-

Review address translation.

-

Page translation and the TLB.

$ cat announce.txt

-

This is it! The last week for ASST2!

-

A reminder: 421/521 assignments are cumulative and we do not distribute solution sets without penalty.

-

Midterm will cover everything through Friday.

-

ASST2 turn-in party Friday starting after class, in and around Davis 303.

-

A leader board is coming! (And it includes privacy settings, obviously.)

PS: ASST2 and ASST3 Are Hard

-

Hopefully you’ve realized this by now.

Turns out ASST2

Is all of Vol 1

Efficient Translation

Goal: almost every virtual address translation should be able to proceed without kernel assistance.

-

The kernel is too slow!

-

Recall: kernel sets policy, hardware provides the mechanism.

Implicit Translation

-

Process: "Machine! Store to address 0x10000!"

-

MMU: "Where the heck is virtual address 0x10000 supposed to map to? Kernel…help!"

-

(Exception.)

-

Kernel: Machine, virtual address 0x10000 maps to physical address 0x567400.

-

MMU: Thanks! Process: store completed!

-

Process: KTHXBAI.

K.I.S.S.: Base and Bound

Simplest virtual address mapping approach.

-

Assign each process a base physical address and bound.

-

Check: Virtual Address is OK if Virtual Address < bound.

-

Translate: Physical Address = Virtual Address + base
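The check and translate steps above can be sketched in a few lines. This is only an illustration: the function name `translate_base_bound` and the use of `ValueError` to stand in for a hardware exception are assumptions, not part of the course code.

```python
def translate_base_bound(vaddr, base, bound):
    """Translate a virtual address using one base and one bound per process."""
    # Check: the virtual address is OK only if it is below the bound.
    if not 0 <= vaddr < bound:
        # Stand-in for the exception the hardware would raise.
        raise ValueError(f"virtual address {vaddr:#x} is out of bounds")
    # Translate: relocate by the process's base physical address.
    return vaddr + base


# Example: a process with base 0x500000 and bound 0x20000.
print(hex(translate_base_bound(0x10000, 0x500000, 0x20000)))  # 0x510000
```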

Base and Bounds: Cons

-

Con: Not a good fit for our address space abstraction, which encourages discontiguous allocation. A base-and-bounds allocation must be mostly contiguous; otherwise we lose memory to internal fragmentation.

-

Con: also significant chance of external fragmentation due to large contiguous allocations.

K.I.Simplish.S.: Segmentation

-

Multiple bases and bounds per process, each called a segment.

-

Each segment has a start virtual address, base physical address, and bound.

-

Check: Virtual Address is OK if it is inside some segment, that is, if for some segment:

Segment Start ≤ V.A. < Segment Start + Segment Bound.

-

Translate: For the segment that contains this virtual address:

Physical Address = (V.A. - Segment Start) + Segment Base
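As a rough sketch of the segment check and translation, assuming each segment is a simple record: the `Segment` tuple, the function name, and the example addresses are hypothetical. Real hardware would not scan a list in software.

```python
from collections import namedtuple

# Hypothetical segment record: start virtual address, base physical
# address, and bound (the segment's length in bytes).
Segment = namedtuple("Segment", ["start", "base", "bound"])


def translate_segmented(vaddr, segments):
    """Translate a virtual address using a list of segments."""
    for seg in segments:
        # Check: is the address inside this segment?
        if seg.start <= vaddr < seg.start + seg.bound:
            # Translate: offset within the segment, relocated to its base.
            return (vaddr - seg.start) + seg.base
    # Stand-in for the exception the hardware would raise.
    raise ValueError(f"virtual address {vaddr:#x} is not in any segment")


segments = [
    Segment(start=0x10000, base=0x567000, bound=0x2000),
    Segment(start=0x7FFF0000, base=0x100000, bound=0x10000),
]
print(hex(translate_segmented(0x10400, segments)))  # 0x567400
```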

Segmentation: Cons

-

Con: still requires entire segment be contiguous in memory!

-

Con: potential for external fragmentation due to segment contiguity.

Let’s Regroup

-

Fast mapping from any virtual byte to any physical byte.

-

Operating system cannot do this. Can hardware help?

Translation Lookaside Buffer

-

Common systems trick: when something is too slow, throw a cache at it.

TLB Example

What’s the Catch?

-

CAMs are limited in size. We cannot make them arbitrarily large.

-

Segments are too large and lead to internal fragmentation.

-

Mapping individual bytes would mean that the TLB would not be able to cache many entries and performance would suffer.

-

Is there a middle ground?

Pages

Modern solution is to choose a translation granularity that is small enough to limit internal fragmentation but large enough to allow the TLB to cache entries covering a significant amount of memory.

-

Also limits the size of kernel data structures associated with memory management.

Execution locality also helps here: a process's memory accesses are typically highly spatially clustered, meaning that even a small cache can be very effective.

Page Size

-

4K is a very common page size. 8K or larger pages are also sometimes used.

-

4K pages and a 128-entry TLB allow caching translations for 512 KB of memory.

-

You can think of pages as fixed size segments, so the bound is the same for each.
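The 512 KB figure above is just page size times the number of TLB entries. A small sketch of that arithmetic follows; the helper name `tlb_reach` is an assumption made for illustration.

```python
def tlb_reach(page_size, tlb_entries):
    """Bytes of memory whose translations fit in the TLB at once."""
    return page_size * tlb_entries


KB = 1024
print(tlb_reach(4 * KB, 128) // KB)  # 512  (4K pages, 128 entries)
print(tlb_reach(8 * KB, 128) // KB)  # 1024 (8K pages, same TLB)
print(tlb_reach(1, 128))             # 128  (byte-granularity mappings)
```

The last line shows why mapping individual bytes is a poor fit for a small TLB: the same 128 entries would cover only 128 bytes.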

Page Translation

-

We refer to the portion of the virtual address that identifies the page as the virtual page number (VPN) and the remainder as the offset.

-

Virtual pages map to physical pages.

-

All addresses inside a single virtual page map to the same physical page.

-

Check: for 4K pages, split 32-bit address into virtual page number (top 20 bits) and offset (bottom 12 bits). Check if a virtual page to physical page translation exists for this page.

-

Translate: Physical Address = Physical Page + offset.
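A minimal sketch of the split and translate steps for 4K pages and 32-bit addresses. The names `split` and `translate_paged` are hypothetical, and the `mappings` dictionary (virtual page number to physical page number) is an assumption made to keep the example self-contained.

```python
PAGE_SHIFT = 12                      # 4K pages: 2**12 bytes per page
OFFSET_MASK = (1 << PAGE_SHIFT) - 1  # bottom 12 bits


def split(vaddr):
    """Split a 32-bit virtual address into (VPN, offset)."""
    return vaddr >> PAGE_SHIFT, vaddr & OFFSET_MASK


def translate_paged(vaddr, mappings):
    """mappings: hypothetical dict from VPN to physical page number."""
    vpn, offset = split(vaddr)
    # Check: does a translation exist for this virtual page?
    if vpn not in mappings:
        raise LookupError(f"no translation for virtual page {vpn:#x}")
    # Translate: physical page base address plus the unchanged offset.
    return (mappings[vpn] << PAGE_SHIFT) | offset


print([hex(part) for part in split(0x10234)])         # ['0x10', '0x234']
print(hex(translate_paged(0x10234, {0x10: 0x567})))   # 0x567234
```

Every address in virtual page 0x10 lands in physical page 0x567; only the offset differs.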

A Brief Interlude

TLB Example

Assume we are using 4K pages.

TLB Management

-

The operating system loads entries into the TLB.

-

When a translation is not in the TLB, the TLB asks the operating system for help via a TLB exception. The operating system must either load the mapping or figure out what to do with the process. (Maybe boom.)
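The interaction above can be sketched as a toy software-managed TLB. Everything here is an assumption for illustration: the class and function names, the `kernel_mappings` dictionary, and the oldest-first eviction policy, which the slides do not specify.

```python
PAGE_SHIFT = 12
OFFSET_MASK = (1 << PAGE_SHIFT) - 1


class TLBMiss(Exception):
    """Stands in for the TLB exception raised by the hardware."""

    def __init__(self, vpn):
        super().__init__(hex(vpn))
        self.vpn = vpn


class TLB:
    def __init__(self, capacity=128):
        self.capacity = capacity
        self.entries = {}  # VPN -> physical page number, in insertion order

    def lookup(self, vaddr):
        vpn = vaddr >> PAGE_SHIFT
        if vpn not in self.entries:
            raise TLBMiss(vpn)
        return (self.entries[vpn] << PAGE_SHIFT) | (vaddr & OFFSET_MASK)

    def load(self, vpn, ppn):
        if vpn not in self.entries and len(self.entries) >= self.capacity:
            # Assumed policy: evict the oldest entry to make room.
            del self.entries[next(iter(self.entries))]
        self.entries[vpn] = ppn


def access(tlb, kernel_mappings, vaddr):
    """Translate vaddr, letting the 'kernel' handle TLB misses."""
    try:
        return tlb.lookup(vaddr)
    except TLBMiss as miss:
        if miss.vpn not in kernel_mappings:
            # The kernel has no mapping for this page: boom.
            raise RuntimeError(f"fault at {vaddr:#x}") from miss
        tlb.load(miss.vpn, kernel_mappings[miss.vpn])
        return tlb.lookup(vaddr)  # retry; this time it hits


tlb = TLB(capacity=2)
print(hex(access(tlb, {0x10: 0x567}, 0x10234)))  # 0x567234
```

Only the first access to a page takes the slow path through the kernel; later accesses to the same page hit in the TLB until the entry is evicted.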

Paging: Pros

-

Maintains many of the pros of segmentation, which can be layered on top of paging.

-

Pro: can organize and protect regions of memory appropriately.

-

Pro: better fit for address spaces. Even less internal fragmentation than segmentation due to smaller allocation size.

-

Pro: no external fragmentation due to fixed allocation size!

Paging: Cons

-

Con: requires per-page hardware translation. Use hardware to help us.

-

Con: requires per-page operating system state. A lot of clever engineering here.

Page State

-

Store information about each virtual page.

-

Locate that information quickly.

Page Table Entries (PTEs)

We refer to a single entry storing information about a single virtual page used by a single process as a page table entry (PTE).

-

(We will see in a few slides why we call them page table entries.)

-

Can usually jam everything into one 32-bit machine word:

-

Location: 20 bits. (Physical Page Number or location on disk.)

-

Permissions: 3 bits. (Read, Write, Execute.)

-

Valid: 1 bit. Is the page located in memory?

-

Referenced: 1 bit. Has the page been read or written recently?
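One way to picture packing these fields into a single 32-bit word is sketched below. The field widths come from the list above, but the bit positions are an assumption chosen for illustration (location in the top 20 bits, flags in the low bits); real architectures define their own layouts.

```python
# Hypothetical bit layout for a 32-bit PTE.
LOCATION_SHIFT = 12
LOCATION_MASK = 0xFFFFF   # 20 bits: physical page number or disk location
PERM_SHIFT = 2
PERM_MASK = 0b111         # 3 bits: read, write, execute
READ, WRITE, EXECUTE = 0b100, 0b010, 0b001
VALID = 1 << 0            # 1 bit: is the page located in memory?
REFERENCED = 1 << 1       # 1 bit: read or written recently?


def pack_pte(location, perms, valid, referenced):
    """Pack the fields into one 32-bit integer."""
    if not 0 <= location <= LOCATION_MASK:
        raise ValueError("location does not fit in 20 bits")
    if not 0 <= perms <= PERM_MASK:
        raise ValueError("permissions do not fit in 3 bits")
    word = (location << LOCATION_SHIFT) | (perms << PERM_SHIFT)
    if valid:
        word |= VALID
    if referenced:
        word |= REFERENCED
    return word


def unpack_pte(word):
    """Recover the fields from a packed PTE."""
    return {
        "location": (word >> LOCATION_SHIFT) & LOCATION_MASK,
        "perms": (word >> PERM_SHIFT) & PERM_MASK,
        "valid": bool(word & VALID),
        "referenced": bool(word & REFERENCED),
    }


pte = pack_pte(0x567, READ | WRITE, valid=True, referenced=False)
print(hex(pte))  # 0x567019
```

The four fields use 25 of the 32 bits, which leaves a few bits spare under this assumed layout.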

Locating Page State

-

Process: "Machine! Store to address 0x10000!"

-

MMU: "Where the heck is virtual address 0x10000 supposed to map to? Kernel…help!"

-

(Exception.)

-

Kernel: Let’s see… where did I put that page table entry for 0x10000… just give me a minute… I know it’s around here somewhere… I really should be more organized!

Next Time

-

Page table data structures.

-

How to allocate more memory than we have.

-

And how to make it not terrible.